The Miles That Matter — Winning the Long-Tail Data Race in Autonomy 3.0

Autonomy 3.0 is a sampling problem, not a miles problem.

INTRO

Autonomous vehicles (AVs) and advanced driver-assistance systems (ADAS) don’t fail for lack of clever models or exotic sensors. They fail for lack of the right data—the rare, messy moments where safety hangs in the balance. We’ve spent a decade debating lidar vs. cameras and planner architectures. All critical. But crashes live in the tails: odd road geometry, freak weather, human improvisation. Picture a pedestrian emerging in dense fog at an unmarked crosswalk—rare, but lethal if your system hasn’t “lived” it. If your training diet skimps on these edges, your model masters the mundane, where lethality is rare.

From maps to models to intelligent sampling

Approaches to building AVs have evolved dramatically over the past decade, with the hunger for training and validation data growing exponentially as a result:

Autonomy 1.0 (c. 2010s):

This era was defined by robotics. AVs relied on high-definition (HD) maps for precise localization, with LiDAR as the primary sensor. The "brains" consisted of hard-coded rules to handle specific situations. Data was primarily used to build maps, not to train driving behavior. Performance was improved by analyzing Pareto charts of disengagements, where safety drivers had to intervene.

Autonomy 2.0 (c. late 2010s):

The machine learning revolution transformed the AV stack. AI, initially confined to perception, began to influence decision-making and motion planning. This "Data- Driven Autonomy," a term we coined at Lyft Level 5, required much larger and more structured datasets to train and validate autonomous driving models.

Autonomy 3.0 (Today):

We have now entered a new phase powered by end-to-end AI. The most advanced teams—including Wayve AI, Waymo's research division, NVIDIA, and even Konboi AI (a French star tup focused on AV trucking)—are building unified models that aim to learn the entire driving task f rom sensor input to vehicle control. This approach is orders of magnitude more data-hungry, but it also unlocks the ability to learn from diverse and heterogeneous data sources, which is a massive advantage.

Simulation amplifies scenarios, but it needs a steady infusion of real-world edge cases to stay honest. Even simulation leaders seek fresh, diverse clips—reality is the seed.

The wrong question:

“How many miles?”

Widely cited safety estimates talk in hundreds of millions to billions of miles. That frames the job as accumulation. But most mi les are statistically boring: lane-keeping, steady following, uneventful highways. The better question is:

How many useful events per potential failure class do you have?

If a specific left-turn conflict appears once every tens of millions of miles, you need thousands of distinct variants —day/night, wet /dry, cautious/impatient— before your model behaves like a seasoned local. Raw totals matter far less than edge-aware sampling.

Two laws of AV data

Long-Tail Tax: Safety debt accrues in events you rarely see. Pay it in deployment and you get interventions and incidents. Pay it in training and you need a data engine that hunts those events efficiently.

Sampling Dividend: A “smart” mile— flagged on-device as non-routine and lifted into your pipeline—can be worth thousands of average miles. The compounding advantage is a falling Cost per Useful Event (CPUE) over time.

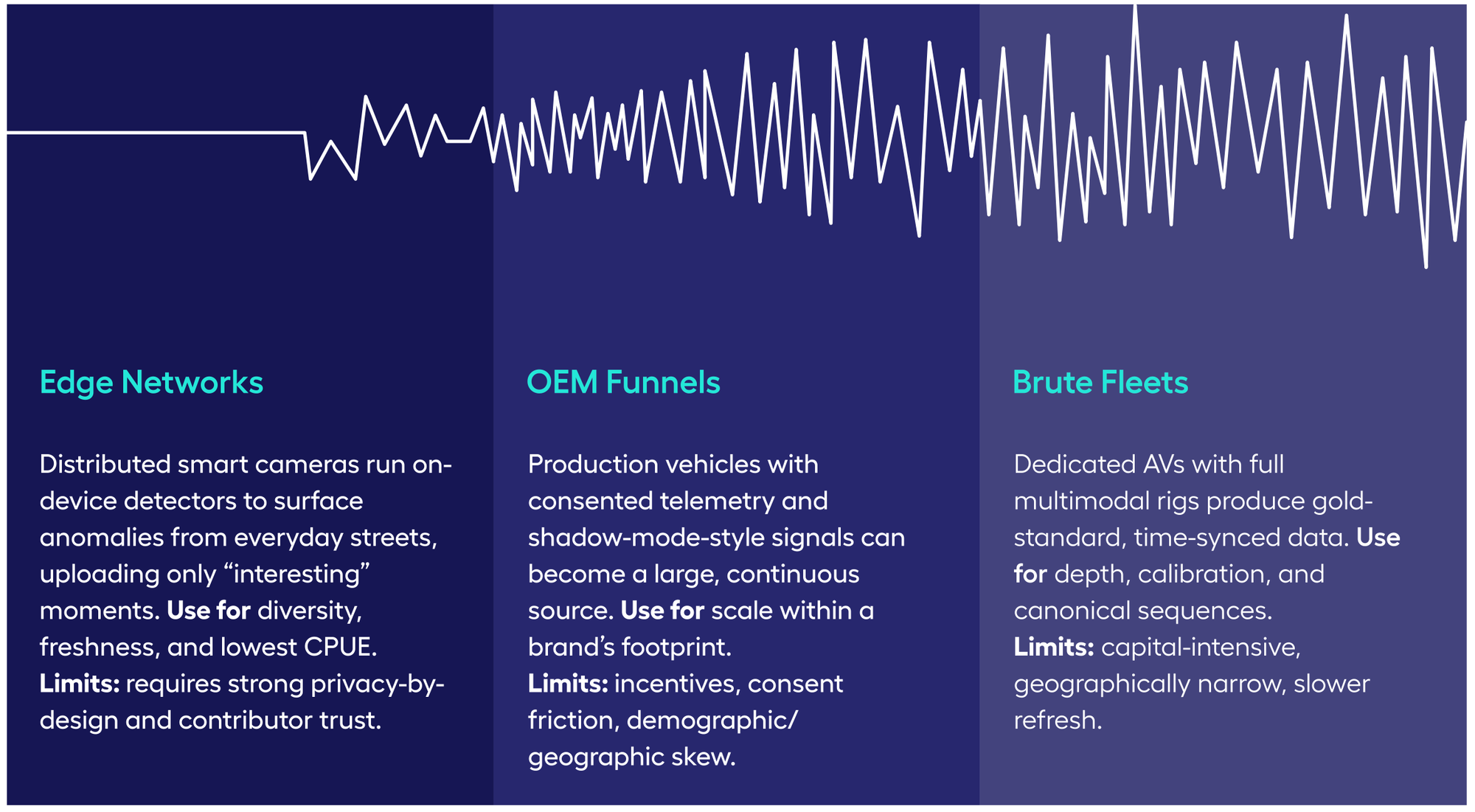

The winning play is blended: fleets for depth, partners for coverage, crowdsourcing for the long tail. Train end-to-end models to generalize across heterogeneity rather than overfit to a single rig. Then use simulation as an amplifier: reality as seed, synthetic as multiplier.

Metrics that actually move safety

Replace vanity mileage with tail-aware metrics your leadership can track:

Maintain a sequestered, tail-heavy evaluation set so you don’t grade on your training data.

If your dashboard skips these, you’re chasing miles, not safety.